Here’s a statistic that should both terrify and excite every CTO: Stanford’s three-year study of more than 100,000 engineers found that while AI coding assistants can boost productivity by 30–40% on simple, greenfield tasks, they can actually decrease productivity by as much as 10% on complex, legacy systems. In just 36 months, we’ve gone from “AI will replace all developers” to “AI might be making some developers worse.” The truth? We’re in the middle of the most consequential shift in software development since object-oriented programming, and most teams are getting it wrong.

The Dawn of AI-Powered Coding Assistants and Their Revolutionary Impact

The current wave of AI in software development began with a genuine “shake the world” moment: the arrival of GitHub Copilot and, soon after, ChatGPT. Between 2022 and 2025, AI-based code generation moved rapidly from novelty to near-ubiquity. The core promise of these early tools was to revolutionise how developers create, test, and deploy applications by automating common operations, offering feedback, validating dependencies, and enforcing best practices. GitHub Copilot, a pioneer in the field, used OpenAI’s Codex and later GPT models to provide rapid, multi-line code suggestions.

Developers using these tools got instant code generation and inline auto-completion as they typed. ChatGPT extended this assistance through a chat interface, letting developers ask technical questions, discuss code, check for errors, and generate code from natural-language prompts. This immediate utility showed up in measurable results: controlled studies reported that developers using AI assistants completed roughly 26% more tasks and cut coding time substantially on certain tasks, in some cases roughly halving it.

Developers also reported staying “in flow” longer, offloading boilerplate work, and feeling less frustrated: in GitHub’s own survey, 73% of respondents said Copilot helped them stay in flow, and 60–75% reported feeling less frustrated when coding. Tools like Pieces Copilot even began to identify and explain errors without being explicitly prompted, an early form of AI-assisted code review. This initial phase established AI coding assistants as powerful catalysts for developer productivity and throughput, with some early adopters reporting a 20–50% increase in code output and substantial time savings.

The Reality Check: Where AI Wins and Where It Fails

Estimated AI Coding Assistant Productivity Impact

Greenfield Projects (New Code)

- Simple Tasks: +30% to +40% productivity

- Popular Languages (Python/JS): +25% to +35%

- Boilerplate/Testing: +40% to +50%

Brownfield Projects (Legacy Code)

- Complex Systems: -10% to 0% productivity

- Large Codebases (1M+ lines): -5% to +5%

- Niche Languages (COBOL/Haskell): -15% to +5%

Net Result: +15% to +20% average productivity

(After accounting for time spent reworking AI-introduced bugs)

The figures above are estimates drawn from multiple industry studies, including Stanford’s analysis of more than 100,000 engineers.

The Three-Year Evolution Timeline:

- 2022: GitHub Copilot launches → “AI will 10x developers”

- 2023: Reality sets in → “AI introduces bugs we have to fix”

- 2024: Specialisation emerges → “Different AI tools for different problems”

- 2025: Agentic AI arrives → “AI that codes, tests, and debugs autonomously”

Confronting Realities, Addressing Challenges, and Fostering Specialisation

As AI code generation tools became more deeply integrated into developer workflows, the initial optimism was tempered by real-world data and a growing awareness of their limitations. While AI has undeniably increased the volume of code produced, studies such as Stanford’s three-year study of more than 100,000 engineers have shown that a significant portion of this “new code” requires rework, specifically fixing bugs introduced by the AI. Once that rework is accounted for, the average productivity gain across industries is a more modest 15% to 20%.

The effectiveness of AI also proved highly variable, depending heavily on task complexity: gains were higher (30–40%) for simpler “greenfield” tasks (the author’s own experience suggests closer to 70%) but much lower (0–10%) for high-complexity “brownfield” projects, where AI sometimes even decreased productivity. Language popularity also played a role. AI struggled with less common languages such as COBOL, Haskell, or Elixir, where inaccurate suggestions could reduce productivity. The study further found that productivity gains fell sharply as codebase size increased, partly because of context-window limits and the difficulty of maintaining a good signal-to-noise ratio in the context supplied to the model.

Beyond technical limitations, serious concerns emerged about over-reliance and skill stagnation, especially among novice programmers who sometimes accepted AI-generated code without fully understanding it, which hindered their development of essential programming skills. Researchers observed novices “drifting” from one suggestion to the next and spending considerable effort “shepherding” the AI towards the code they wanted through carefully crafted prompts, highlighting new interaction challenges. At the same time, ethical, privacy, and security considerations came to the fore. The risk of accidental data leaks (as in the “Samsung fiasco,” where engineers pasted confidential source code into ChatGPT) raised alarms and underscored the need for tools that restrict access to sensitive data and support GDPR compliance.

Questions of intellectual property also arose: who owns AI-generated content, and could models trained on copyrighted data produce infringing code? Bias and fairness in models trained on imperfect datasets, along with the potential for misinformation and disinformation, likewise demand robust mitigation strategies. In response, the market developed specialised, enterprise-grade solutions. Tabnine prioritised privacy and on-premises deployment, offering air-gapped operation and models trained only on permissively licensed code to avoid IP contamination. Sourcegraph’s Cody, by contrast, focuses on deep codebase comprehension, indexing entire repositories to give more relevant, repository-aware answers, especially for large and complex codebases.
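To make repository indexing more concrete, here is a toy sketch in Python. It is not how Cody or any other product works (real tools combine code search, syntax-aware graphs, and embeddings). It simply maps each identifier to the files and lines where it appears, so a question about a symbol can be answered with links back to specific code. The function names `build_index` and `lookup` and the two-file repository are hypothetical.

```python
import re
from collections import defaultdict

# Identifier-like tokens: a letter or underscore followed by letters, digits, or underscores.
TOKEN = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def build_index(files: dict[str, str]) -> dict[str, list[tuple[str, int]]]:
    """Map each lowercased token to the (path, line_number) pairs where it appears."""
    index: dict[str, list[tuple[str, int]]] = defaultdict(list)
    for path, text in files.items():
        for lineno, line in enumerate(text.splitlines(), start=1):
            # set() so a token repeated on one line is recorded only once
            for token in set(TOKEN.findall(line)):
                index[token.lower()].append((path, lineno))
    return dict(index)


def lookup(index: dict[str, list[tuple[str, int]]], query: str) -> list[tuple[str, int]]:
    """Return sorted locations whose line contains every token in the query (AND semantics)."""
    tokens = [t.lower() for t in TOKEN.findall(query)]
    if not tokens:
        return []
    hits = set(index.get(tokens[0], []))
    for token in tokens[1:]:
        hits &= set(index.get(token, []))
    return sorted(hits)


# Hypothetical two-file repository, used only for illustration.
repo = {
    "billing/invoice.py": "def compute_total(items):\n    return sum(i.price for i in items)\n",
    "api/routes.py": (
        "from billing.invoice import compute_total\n"
        "\n"
        "def checkout(cart):\n"
        "    return compute_total(cart.items)\n"
    ),
}

index = build_index(repo)
print(lookup(index, "compute_total"))
```

Even this crude index shows why repository-aware answers help on large codebases. Instead of guessing from whatever fits in the context window, the assistant can retrieve the exact definition and call sites of `compute_total` across files.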

Cursor, a fork of VS Code, offered an “AI-native” editor experience with deeper AI integration than a simple plugin can provide. Throughout, experts continued to stress human oversight: AI is a tool to enhance developers and testers, not replace them, and their roles are shifting towards reviewing and understanding AI-generated code.

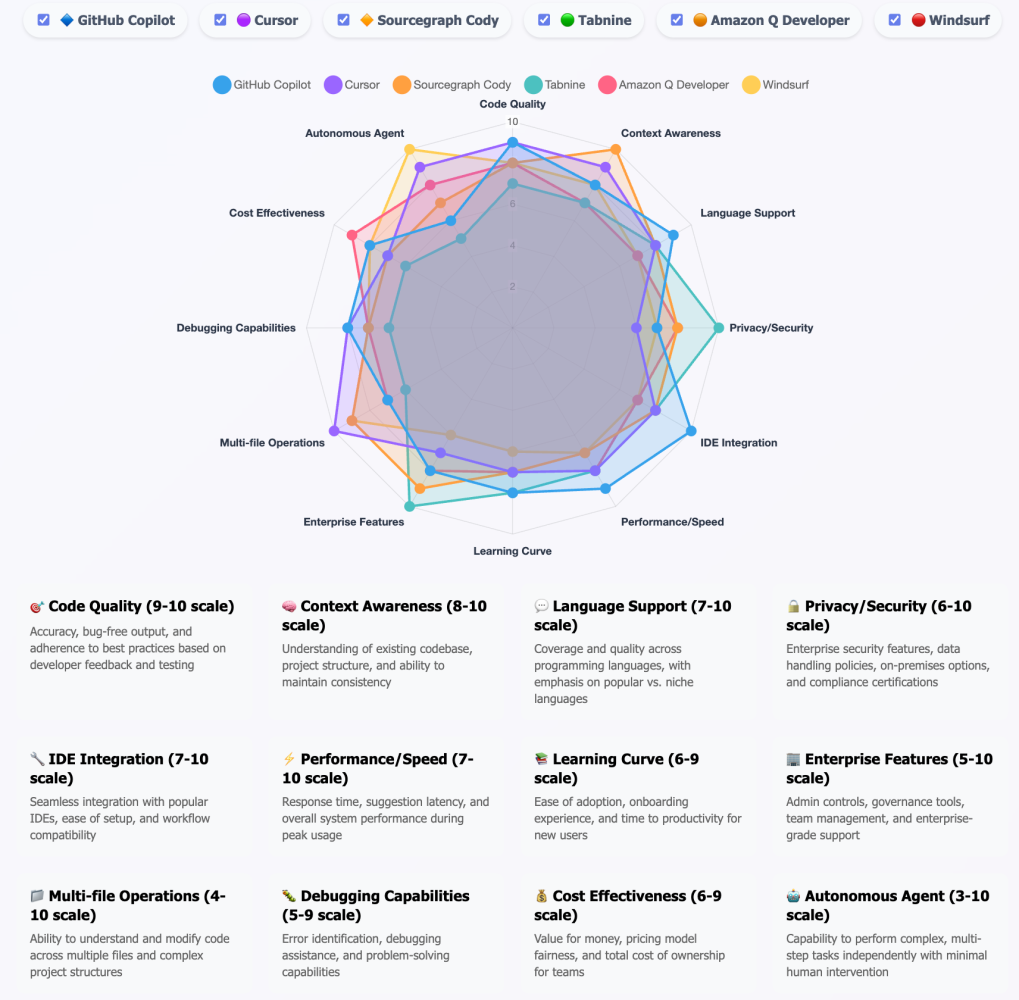

The image above gives a quick summary of the tools currently making waves. If you would like another tool included, please feel free to DM me.

The Future of AI as an Integrated, Autonomous, and Responsibly Governed Co-developer

The trajectory of AI in software development points towards an even more integrated, intelligent, and agentic co-developer, capable of increasingly autonomous action yet always guided by human oversight and robust governance. The frontier of this evolution is agentic behaviour: AI that plans and executes multi-step development tasks on its own. Cursor’s “Agent” feature and Windsurf’s “Cascade” agent demonstrate this by interpreting high-level instructions, reading and writing changes across multiple files, running tests, observing errors, and debugging in an iterative loop until they succeed. GitHub Copilot moved in the same direction with “Agent mode,” initially released in preview and designed to handle multi-step tasks across files with minimal user intervention. To make this work, tools are building deep codebase comprehension, using extensive indexing and graph analysis to “know your repo”.
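The iterative loop described above (run tests, observe errors, revise, repeat) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Cursor, Windsurf, or Copilot implement their agents. `stub_model` is a hypothetical stand-in for an LLM call, and `exec` is used only to keep the example self-contained. A real agent would run tests in an isolated sandbox.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CheckResult:
    passed: bool
    error: str = ""


def run_tests(source: str) -> CheckResult:
    """Load candidate code and run a tiny, fixed test suite against its `add` function."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # illustration only: never exec untrusted code outside a sandbox
        add = namespace["add"]
        for args, expected in [((2, 3), 5), ((-1, 1), 0)]:
            got = add(*args)
            if got != expected:
                return CheckResult(False, f"add{args} returned {got}, expected {expected}")
    except Exception as exc:  # syntax errors, missing names, runtime failures
        return CheckResult(False, f"{type(exc).__name__}: {exc}")
    return CheckResult(True)


def stub_model(source: str, error: str) -> str:
    """Hypothetical LLM stand-in: fixes one known bug, otherwise returns the code unchanged."""
    if "return a - b" in source:
        return source.replace("return a - b", "return a + b")
    return source


def agent_loop(
    source: str,
    propose_fix: Callable[[str, str], str],
    max_iterations: int = 5,
) -> tuple[Optional[str], list[str]]:
    """Run tests, feed failures back to the model, and retry until green or out of budget."""
    history: list[str] = []
    for _ in range(max_iterations):
        result = run_tests(source)
        history.append(result.error or "all tests passed")
        if result.passed:
            return source, history
        source = propose_fix(source, result.error)
    return None, history  # give up and escalate to a human


buggy = "def add(a, b):\n    return a - b\n"
fixed, history = agent_loop(buggy, stub_model)
print(history)
print(fixed)
```

The `max_iterations` cap is the important design choice. When the model cannot converge, the loop stops and returns `None`, handing the problem back to a human instead of looping forever. This is the kind of human oversight the rest of this section argues for.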

Sourcegraph Cody, for instance, is built on Sourcegraph’s code search index. It can answer complex questions about an entire codebase, with explanations linked to specific code references, which makes it valuable for understanding legacy code and onboarding developers. Multi-modal input and broader workflow integration are also emerging: Windsurf and Cursor accept drag-and-drop UI design images and generate corresponding code, pushing the boundaries of AI’s creative utility. AI is being woven into the entire development lifecycle, well beyond code generation. This includes testing and debugging (generating unit tests, analysing failures, suggesting fixes), refactoring and documentation (proposing large-scale refactors, improving code quality, writing detailed documentation), and even CI/CD pipelines (automating code analysis, suggesting pipeline changes, drafting pull request descriptions).

Amazon Q Developer bridges coding and cloud operations, analysing cloud bills, checking resource status, and diagnosing Lambda timeouts, effectively acting as a “DevOps copilot”.

Central to this future is a firm commitment to responsible AI and robust governance. This means clear human oversight and accountability mechanisms. It means transparency and explainability through measures such as AI watermarking and documentation that clarifies AI decision-making. It also means active bias mitigation through diverse training datasets, regular audits, and resampling techniques.

Data security and privacy remain paramount. That means compliance with regulations such as GDPR and a guarantee that code is not used for training without explicit consent. The long-term vision casts AI assistants as valuable team members that need onboarding, guidance (policy), and monitoring, much like a human hire. This balanced approach aims to maximise AI’s benefits (faster development, more consistent code, and developers free to focus on creative work) while mitigating its risks. The payoff is higher productivity, better code quality, and a more empowered engineering team. The market is projected to grow significantly, and AI coding assistants are on track to become a standard tool in software development.

Strategic Takeaways: Your AI Coding Survival Guide

For Developers: Master the “AI Sandwich”

- Use AI for: Boilerplate, unit tests, documentation, greenfield prototypes

- Keep human control: Architecture decisions, complex business logic, code reviews

- Avoid the trap: Don’t become an “AI code reviewer” who can’t code without assistance

- Level up: Learn prompt engineering like you learned Git—it’s now a core skill

For Engineering Leaders: Build the Right AI Stack

- Privacy-first environments: Tabnine for sensitive codebases

- Complex legacy systems: Sourcegraph Cody for deep repo understanding

- AI-native teams: Cursor for integrated development experiences

- Governance is non-negotiable: Set clear AI policies before, not after, adoption

For CTOs: Prepare for the Paradigm Shift

- Budget reality: Plan for 15–20% productivity gains at the outset, not the promised 50%

- Hiring evolution: Seek “AI shepherds” who can collaborate with and validate AI

- Risk mitigation: Implement IP protection, bias auditing, and code quality gates

- Culture shift: AI collaboration is now as important as team collaboration
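Governance can be expressed as code. The sketch below encodes a hypothetical merge policy for AI-assisted changes. The `PullRequest` fields, the 80% coverage threshold, and the rules themselves are assumptions chosen for illustration. A real team would wire checks like these into its own CI system and set its own thresholds.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    # Hypothetical metadata a CI system might attach to a change.
    ai_assisted: bool
    human_reviewers: int
    tests_passed: bool
    coverage: float  # fraction of changed lines covered by tests, 0.0 to 1.0
    licences_flagged: list[str] = field(default_factory=list)


def quality_gate(pr: PullRequest, min_coverage: float = 0.8) -> list[str]:
    """Return a list of policy violations; an empty list means the change may merge."""
    violations: list[str] = []
    if not pr.tests_passed:
        violations.append("tests must pass")
    # Stricter rules apply only to AI-assisted changes (an assumed policy, not a standard).
    if pr.ai_assisted and pr.human_reviewers < 1:
        violations.append("AI-assisted changes need at least one human reviewer")
    if pr.ai_assisted and pr.coverage < min_coverage:
        violations.append(f"AI-assisted changes need >= {min_coverage:.0%} test coverage")
    if pr.licences_flagged:
        violations.append("possible licence issues: " + ", ".join(pr.licences_flagged))
    return violations


ok = PullRequest(ai_assisted=True, human_reviewers=1, tests_passed=True, coverage=0.9)
risky = PullRequest(
    ai_assisted=True,
    human_reviewers=0,
    tests_passed=True,
    coverage=0.5,
    licences_flagged=["GPL-3.0 snippet"],
)
print(quality_gate(ok))
print(quality_gate(risky))
```

Returning a list of violations rather than a single boolean makes the gate's decision explainable. A reviewer sees exactly which policy a change broke, which supports the transparency goals described earlier.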

The Big Question That’s Dividing Engineering Teams

Here’s what’s keeping CTOs awake at night: Should junior developers be allowed to use AI coding assistants, or does it create a generation of developers who can’t actually code?

Team A argues: “AI is like Stack Overflow on steroids. It accelerates learning and lets juniors contribute faster.”

Team B counters: “We’re creating code junkies who copy-paste without understanding. When AI fails, they’re helpless.”

What’s your take? Are we building better developers or better AI-dependent code generators?

Share your thoughts below—this debate is reshaping how we approach software engineering education and career development.