Introduction

As enterprises move beyond experimentation and into large-scale deployment of agentic platforms, one reality has become unavoidable: traditional software pricing and commercial models are no longer fit for purpose. Agentic systems do not behave like SaaS tools: they replace labor, execute decisions autonomously, and embed themselves deeply into the operations of organizations. Services and software providers should be prepared to evolve their traditional SaaS pricing models and systems-integration pyramid structures quickly.

This paper offers an experience-driven perspective on how pricing, services, and commercial architectures must evolve to support this shift. Drawing on real-world experiences over the past several years from enterprise AI deployments, forward-deployed engineering models, and outcome-driven transformations, it outlines pragmatic approaches for software companies, startups, service providers, and enterprise buyers alike.

The goal is not theory, but clarity: how to structure commercial models that fund complexity, align incentives, scale with autonomy, and ultimately unlock sustainable value from agentic platform integration.

1. Strategic Foundation: The Shift from SaaS to Service-as-Software

The enterprise technology landscape is currently undergoing a structural metamorphosis that renders traditional Software-as-a-Service (SaaS) business models increasingly obsolete. For decades, the dominant economic logic of the software industry has been predicated on the sale of tools, passive instruments that require human operators to generate value. In this paradigm, monetization was logically tethered to access: the “seat.” However, the emergence of Enterprise Generative AI, specifically systems leveraging Ontologies and Agentics to create “Agentic Autonomous Organizations,” represents a transition from tool-making to labor-replacement. This shift necessitates a fundamental reimagining of the business model, moving from selling efficiency to selling autonomy.

For a progressive startup or a large integrator operating at this frontier, the separation between “services” and “product” is no longer a distinct boundary but a fluid continuum. The complexity of deploying autonomous agents, which must navigate the messy, unstructured reality of enterprise data without hallucination, demands a high-touch engagement model. This is not merely a deployment necessity but a strategic advantage.

The integration of Forward Deployed Engineers (FDEs) with a proprietary software stack creates a “technology flywheel” where specific customer problems solved in the field are abstracted into generalizable product features, thereby funding R&D through revenue rather than venture capital.

1.1 The Failure of the Seat-Based Model in an Autonomous Era

The traditional SaaS pricing model, typically defined by a per-user, per-month fee, is fundamentally misaligned with the value proposition of Agentic AI. The primary objective of an autonomous agent is to reduce the necessity for human intervention in workflows. If an “Accounts Payable Agent” successfully automates the reconciliation of invoices, the enterprise requires fewer human accountants. In a seat-based model, the vendor is effectively penalized for their own success: as the software becomes more effective, the customer’s seat count, and thus the vendor’s revenue, declines. This phenomenon, often referred to as “seat compression,” creates a perverse incentive for vendors to limit the autonomy of their systems to protect their revenue streams.

Furthermore, “Agentic Seats” are emerging as a new category. Industry leaders are experimenting with models where AI agents are treated as “digital workers” that possess their own usage quotas, API access keys, and security credentials, distinct from human users. This distinction is critical because digital workers have fundamentally different consumption patterns. A human user is biologically limited to a certain number of interactions per day; an autonomous agent can scale its operations infinitely, constrained only by compute resources and API rate limits. Therefore, pricing strategies must decouple revenue from human headcount and align it with the volume and value of the work performed by the agents.

1.2 The Ontological Imperative

To understand how to build comprehensive Enterprise-level AI-driven business models, one needs to understand the specific reliance on “Ontologies.” This is the central differentiator between a generic “Co-pilot” (which assists a human) and an “Agent” (which acts independently). Large Language Models (LLMs) are probabilistic engines; they do not inherently understand truth or business logic. To function safely in an enterprise context, they require an Ontology: a semantic layer or knowledge graph that maps the entities, relationships, and rules of the business.
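
To make the idea of a semantic layer concrete, the following is a minimal sketch in Python, assuming a toy accounts-payable domain. The `Ontology` class, the entity names, and the purchase-order rule are hypothetical illustrations, not the schema of any particular product; a production knowledge graph would be far richer.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Ontology:
    """Hypothetical toy ontology: entities, relationships, and business rules."""
    entities: set[str] = field(default_factory=set)
    # (subject, predicate, object) triples, e.g. ("Invoice", "issued_by", "Vendor")
    relationships: set[tuple[str, str, str]] = field(default_factory=set)
    # Named rules that an agent's proposed action must satisfy
    rules: dict[str, Callable[[dict], bool]] = field(default_factory=dict)

    def violations(self, action: dict) -> list[str]:
        """Return the names of all rules that the proposed action breaks."""
        return [name for name, rule in self.rules.items() if not rule(action)]


ap = Ontology(
    entities={"Invoice", "Vendor", "PurchaseOrder"},
    relationships={
        ("Invoice", "issued_by", "Vendor"),
        ("Invoice", "matches", "PurchaseOrder"),
    },
    rules={
        # Assumed rule: payments above 10,000 require a matching purchase order
        "po_required_over_10k": lambda a: a["amount"] <= 10_000
        or a.get("po_id") is not None,
    },
)

# An agent proposes an action; the ontology is consulted before execution.
proposed = {"type": "pay_invoice", "amount": 25_000, "po_id": None}
blocked = ap.violations(proposed)
```

The point of the sketch is the division of labor: the LLM proposes, while the ontology supplies deterministic rules that decide whether the proposal is allowed to run.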

Building this Ontology is a capital-intensive, high-intellect exercise. It cannot be automated via a “self-serve” signup flow. It requires deep integration into legacy systems (ERPs, CRMs) and the translation of tribal knowledge into machine-readable code. Consequently, the business model must account for the high upfront cost of this ontological construction.

This is where the Forward Deployed Engineering (FDE) model becomes essential. The FDE does not just “install software”; they act as the “Technical Co-Founder” of the client’s autonomous organization, building the ontological foundation that makes the agents viable.

1.3 The Convergence of Services and Software

The strategic objective for any startup or ISV is to execute a “Services-Led Growth” strategy that eventually transitions into high-margin software revenue. Investors typically discount services revenue due to its lower margins and linear scaling constraints compared to the exponential scalability of software. However, in the realm of complex Enterprise AI, this distinction is artificial. Services are the delivery mechanism for the software’s value, a concept which HFS Research refers to as “Services-as-Software”.

Without the FDE to weave the software into the client’s data fabric, the software has much less value. Conversely, without the underlying software platform (the Ontology core, the Agent orchestration engine), the FDE is merely a high-priced consultant with no leverage.

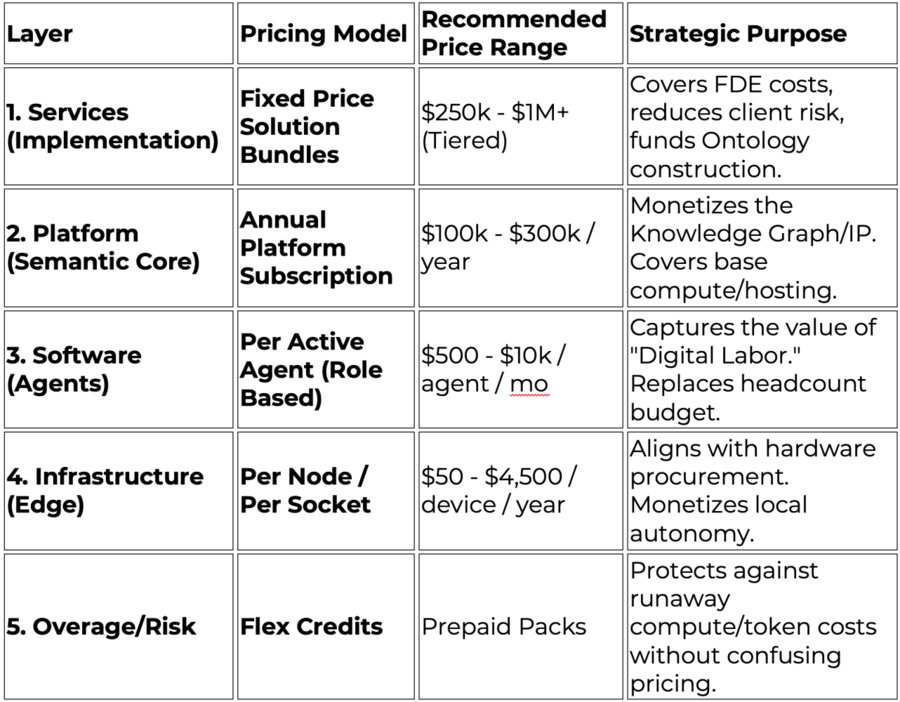

The challenge, therefore, is to design a pricing architecture that:

- Captures the value of the FDE talent without turning the company into a low-margin consultancy.

- Monetizes the Ontology as a premium asset that locks in the customer.

- Prices the Agents based on the labor they replace or the outcomes they generate, ensuring revenue grows as autonomy increases.

- Adapts to Hardware realities when agents are deployed on edge devices or robotics.

The following sections provide an analysis of these components, structured to serve as a blueprint for one’s commercial strategy.

2. The Services Engine: The Forward Deployed Engineering (FDE) Model

The Forward Deployed Engineer (FDE) model is often misunderstood as simply “sales engineering” or “professional services.” In the Palantir context, and for any startup building Agentic Autonomous Organizations, the FDE is a product development role located at the customer interface. The FDE creates the “technological connective tissue” between the abstract capabilities of the AI platform and the concrete, messy realities of the client’s business.

2.1 The Operational Mechanics and Culture of the FDE

The core of the FDE culture is “radical deference to the field.” Unlike traditional implementation teams that are constrained by a rigid product roadmap (“You can’t do that until Q3”), FDEs are empowered to build whatever is necessary to solve the client’s problem now. If the platform lacks a specific connector for a 30-year-old mainframe or a specific visualization for a logistics workflow, the FDE just builds it and asks for forgiveness afterwards.

This autonomy requires a specific talent profile. FDEs are not typical IT consultants; they are full-stack software engineers with high social intelligence and business acumen. They command significant compensation, with total packages in North America ranging from $120,000 to $160,000 for standard roles, and significantly higher for elite performers at top-tier firms. This FDE-driven delivery model puts extreme pressure on the global SIs and offshore firms whose heavy offshore, pyramid-based staffing profiles are misaligned. For the startup, this means the “cost of goods sold” (COGS) for services is high, necessitating premium pricing.

The “Binary Transfer” and the Flywheel

The critical mechanism that prevents the FDE model from becoming a trap is the concept of “Binary Transfer.” This is the process by which custom code written in the field is harvested, generalized, and integrated into the core product binary.

- Field Innovation: An FDE at a shipping client writes a script to optimize container loading based on a new constraint.

- Abstraction: The Product Team identifies that this logic is relevant to 50% of the customer base.

- Integration: The logic is rewritten as a core module of the platform.

- Deployment: The new feature is pushed to all customers.

- Result: The “custom” work done for one client subsidizes the R&D for the entire platform. The startup effectively uses the client’s budget to fund its own product roadmap.

2.2 Services Pricing Models: Analysis and Recommendations

This section reviews the FDE commercial models typically deployed: rate cards, fixed prices, and value-based pricing. The analysis suggests a strategic progression: start with Rate Cards to protect cash flow, move to Fixed Price/Solution licensing to capture margin, and reserve Value-Based pricing for high-trust, mature partnerships. This staged model builds up to very high-margin projects once delivery success has been evidenced and customer trust exists.

2.2.1 Rate Cards: Benchmarking the Talent

In the early stages of an engagement, or when dealing with procurement departments that require line-item transparency, the Rate Card model is the default. It ensures that every hour of the FDE’s time is billable, protecting the startup from the “scope creep” inherent in AI projects where data quality is often worse than advertised.

However, standard IT consulting rates are insufficient for FDEs. These are not “staff augmentation” roles; they are “architects of autonomy.” The pricing must reflect this premium.

Table 1: Strategic FDE Rate Card Benchmarks (2025/2026)

Strategic Insight: One should aim to move away from this model as quickly as possible. Rate cards punish efficiency. If an FDE uses the company’s proprietary “Agent Builder” tool to complete a task in 2 hours that would take a normal consultant 20 hours, the Rate Card model reduces revenue by 90%.
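
The 90% figure follows directly from the arithmetic of hourly billing. A minimal sketch, assuming a hypothetical hourly rate (the rate cancels out of the percentage, so any value gives the same result):

```python
def rate_card_revenue(hours: float, hourly_rate: float) -> float:
    """Revenue under a pure time-and-materials rate card."""
    return hours * hourly_rate


HOURLY_RATE = 300.0  # hypothetical rate, for illustration only

baseline = rate_card_revenue(20, HOURLY_RATE)      # normal consultant: 20 hours
with_tooling = rate_card_revenue(2, HOURLY_RATE)   # FDE with proprietary tooling: 2 hours

# Fraction of revenue lost because the work was done faster
revenue_drop = 1 - with_tooling / baseline
```

Under these assumptions `revenue_drop` comes out at 0.9, which is why better tooling and hourly billing pull in opposite directions.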

2.2.2 Fixed Price / Solution-Based Licensing (The Target Model)

This is the model that is the “best practice” for high-growth AI businesses. Instead of selling “hours,” the startup sells a “Capability” or “Outcome.” The FDE costs are bundled into a high, upfront license fee.

Structure:

- The “Solution Unit”: The contract defines a scope, such as “Deployment of Autonomous Supply Chain Control Tower.”

- The Price: A single line item, e.g., $1,500,000 per year.

- The Bundle: This fee includes the software license, the hosting costs, and “Implementation Services required to achieve the scoped outcome.”

- The Margin Arbitrage: The client pays for the value of the solution. If the startup’s technology is superior, the FDEs might only need to work 3 months to deploy it. The revenue for the remaining 9 months then carries little or no services cost and is close to pure margin. This aligns the solution provider’s incentive (build better tools to deploy faster) with the client’s incentive (get the solution live faster).
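
The margin arbitrage can be sketched numerically. The $1,500,000 price comes from the structure above; the team size, the loaded monthly cost per FDE, and the annual hosting cost are hypothetical assumptions chosen only to show how deployment speed drives margin.

```python
def solution_gross_margin(
    annual_price: float,
    fde_count: int,
    fde_monthly_cost: float,
    deploy_months: int,
    annual_platform_cost: float,
) -> float:
    """Gross margin (0..1) of a fixed-price solution in its first year."""
    services_cost = fde_count * fde_monthly_cost * deploy_months
    total_cost = services_cost + annual_platform_cost
    return (annual_price - total_cost) / annual_price


PRICE = 1_500_000            # single line item from the example above
FDE_COUNT = 3                # hypothetical team size
FDE_MONTHLY_COST = 25_000    # hypothetical fully loaded cost per FDE per month
PLATFORM_COST = 100_000      # hypothetical annual hosting and compute cost

fast = solution_gross_margin(PRICE, FDE_COUNT, FDE_MONTHLY_COST, 3, PLATFORM_COST)
slow = solution_gross_margin(PRICE, FDE_COUNT, FDE_MONTHLY_COST, 12, PLATFORM_COST)
```

With these inputs, a 3-month deployment yields roughly a 78% gross margin, while a 12-month deployment of the same scope yields about 33%. The price is identical in both cases; only the vendor's delivery efficiency changes.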

Illustrative Pricing Reference:

- Level 0 (Pilot): $325,000. Limited scope, single node.

- Level 1 (Departmental): $650,000. Multiple data sources, moderate complexity.

- Level 2 (Enterprise): $1.3M+. Full cross-functional integration.

This model effectively hides the high cost of the FDEs inside the software license. Importantly, if you are a startup, this maintains the optical illusion of being a “Software Company” rather than a “Consultancy” to investors.

2.2.3 Value-Based / Gain-Share Models

The ultimate evolution of FDE pricing is the “Gain-Share” model, where one takes a percentage of the economic value created. This is particularly relevant for “Agentic” systems that have a direct impact on the P&L (e.g., reducing inventory holding costs, increasing debt recovery, revenue recovery of healthcare claims).

Mechanics of Gain-Share:

- Baseline Establishment: The FDEs and the client agree on a “Baseline” metric (e.g., current cost per claim processed is $15).

- The Uplift: The Agentic system reduces the cost to $5. The “Value Created” is $10 per claim.

- The Split: The startup takes 20-30% of the savings ($2-$3 per claim) for a fixed period (e.g., 2 years).
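
The three steps above reduce to a short calculation. In this sketch the per-claim costs and the 20-30% share come from the example; the annual claim volume is a hypothetical assumption.

```python
def gain_share_fee(
    baseline_cost: float, new_cost: float, volume: int, share: float
) -> float:
    """Vendor fee as a share of the savings versus the agreed baseline."""
    # No fee is owed if the agent fails to beat the baseline
    savings_per_unit = max(baseline_cost - new_cost, 0.0)
    return savings_per_unit * volume * share


CLAIMS_PER_YEAR = 1_000_000  # hypothetical volume

fee_low = gain_share_fee(15.0, 5.0, CLAIMS_PER_YEAR, 0.20)
fee_high = gain_share_fee(15.0, 5.0, CLAIMS_PER_YEAR, 0.30)
no_uplift = gain_share_fee(15.0, 16.0, CLAIMS_PER_YEAR, 0.20)
```

At one million claims a year, the vendor would earn between about $2M and $3M annually, and nothing at all if costs do not fall below the baseline. That floor at zero is what makes the baseline definition the most heavily negotiated term in the contract.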

Pros & Cons:

- Pros: Unlimited upside revenue potential. If the agent saves the client $100M, a 20% share earns the solution provider $20M, far more than any license fee.

- Cons: Extremely difficult to negotiate. Requires “Open Book” accounting from the client. Attribution is messy (e.g., “Did the agent save the money, or did the market price of oil drop?”).

- Consideration: Use this as a “kicker” or bonus structure on top of a fixed fee, rather than the primary revenue source, to mitigate risk.

3. The Semantic Core: Monetizing the Ontology

In the market of 2026, the Ontology is not just an enabler; it is a product in itself. It serves as the “Digital Twin” of the enterprise, providing the semantic grounding necessary for agents to reason.

3.1 The Ontology as a Platform Product

Unlike a vector database (which stores snippets of text), a Knowledge Graph/Ontology stores structured truth. It requires distinct infrastructure and maintenance. Therefore, it should be priced as a “Platform Foundation.”

Pricing the “Semantic Layer”:

- Base Platform Fee: One should charge an annual “Platform Fee” ($100k – $300k) that covers the storage, management, and compute required to maintain the Knowledge Graph. This is the “operating system” for the agents.

- The “Knowledge Maintenance” Subscription: Ontologies are living organisms. Business rules change. One can charge a recurring service fee (e.g., 20% of the initial implementation) to keep the ontology synchronized with the business reality. This ensures that the agents don’t drift into obsolescence.
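
As a rough illustration of how the two components combine into recurring revenue, the sketch below uses a platform fee inside the range quoted above and the 20% maintenance rate from the example. The implementation fee is a hypothetical input.

```python
def ontology_annual_revenue(
    platform_fee: float,
    implementation_fee: float,
    maintenance_rate: float = 0.20,
) -> float:
    """Recurring annual revenue from the semantic layer for one client."""
    maintenance_fee = implementation_fee * maintenance_rate
    return platform_fee + maintenance_fee


# Hypothetical client: $200k platform fee, $650k initial implementation
recurring = ontology_annual_revenue(platform_fee=200_000, implementation_fee=650_000)
```

Here the maintenance subscription adds $130,000 a year on top of the platform fee, so the ontology alone contributes about $330,000 in recurring revenue before any agent is priced.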

3.2 Vertical-Specific Ontology Libraries

A significant growth lever is the development of pre-built Ontologies. If the FDEs build a robust “Semiconductor Manufacturing Ontology” for one client, this IP can be licensed to the next client.

- Strategy: Offer “Industry Packs” or “Accelerators” or “Agent Marketplaces”.

- Pricing: A one-time or recurring licensing fee (e.g., $50,000/year) for access to the “Standardized Data Model.”

- Value Prop: This reduces the FDE time by 50-70%, allowing the client to deploy agents in weeks rather than months.

4. Software Pricing Models: The Economics of Digital Labor

Once the FDEs have established the ontological foundation, the core revenue engine, the software agents, must be monetized. There are various commercial models to consider: Pay per agent, Pay per transaction, and Tokenization. Our own experience and research indicate that “Digital Worker” (Per Agent) pricing is the optimal strategy for Enterprise AI, while Tokenization is a trap to be avoided for customer-facing pricing.

4.1 The “Token Trap”: Why Consumption Pricing Fails in Enterprise

The underlying cost of Generative AI is compute/tokens (e.g., GPT-5 input/output tokens). It is tempting to pass this cost directly to the customer with a markup (e.g., Cost + 30%).

- The Unpredictability Problem: Enterprise buyers operate on fixed annual budgets. Token consumption is inherently volatile. An autonomous agent might get stuck in a recursive reasoning loop, consuming millions of tokens in minutes. This creates “Bill Shock,” which is fatal for enterprise relationships.

- The Misalignment: Token pricing penalizes “thinking.” If one improves the agent by adding a “Chain of Thought” reasoning step (which increases accuracy), the cost to the customer increases. The customer is incentivized to use dumber models to save money, which degrades the value of the solution.

- Strategic Recommendation: Never expose raw token pricing to the business buyer. Use tokens as an internal COGS metric, but wrap them in higher-level abstraction.

A note of caution: solution providers must tightly govern their own internal token costs, especially if they are leveraging higher-cost LLMs and more advanced reasoning agents.
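
One way to govern token spend internally is a per-task budget that halts a runaway loop before it becomes a cost problem. The following is a minimal sketch, not a reference to any vendor's API: `TokenMeter`, the budget, and the blended cost per 1,000 tokens are all hypothetical.

```python
from dataclasses import dataclass


class TokenBudgetExceeded(RuntimeError):
    """Raised when an agent task would exceed its internal token budget."""


@dataclass
class TokenMeter:
    budget_tokens: int
    cost_per_1k_tokens: float  # hypothetical blended internal rate
    used_tokens: int = 0

    def record(self, tokens: int) -> None:
        """Register token usage, refusing anything beyond the budget."""
        if self.used_tokens + tokens > self.budget_tokens:
            raise TokenBudgetExceeded(
                f"budget of {self.budget_tokens} tokens would be exceeded"
            )
        self.used_tokens += tokens

    @property
    def cogs(self) -> float:
        """Internal cost of goods sold for the tokens consumed so far."""
        return self.used_tokens / 1000 * self.cost_per_1k_tokens


meter = TokenMeter(budget_tokens=20_000, cost_per_1k_tokens=0.01)
steps_completed = 0
halted = False
try:
    while True:  # stands in for an agent stuck in a recursive reasoning loop
        meter.record(4_000)  # each simulated reasoning step uses 4,000 tokens
        steps_completed += 1
except TokenBudgetExceeded:
    halted = True  # the task is stopped and can be escalated to a human
```

The customer never sees these numbers; the meter exists so that the vendor's COGS stays bounded while the customer-facing price remains a stable abstraction.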

4.2 The “Digital Worker” Model: Pay-Per-Agent

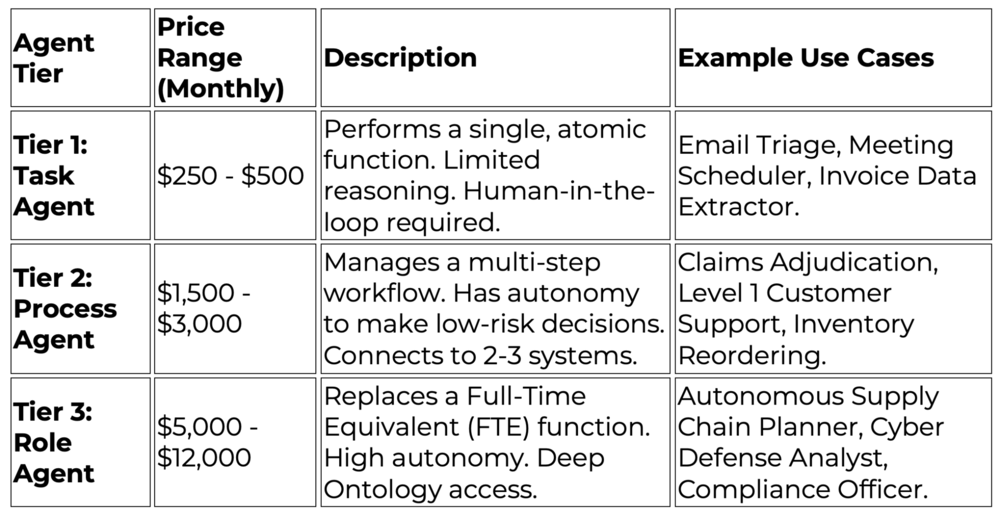

The most conceptually aligned model for “Agentic Autonomous Organizations” is to price the software as Digital Labor.

- Concept: The enterprise is “hiring” an agent to perform a role previously done by a human or to augment a team.

- Pricing Unit: “Per Active Agent” per month.

Tiered Agent Pricing Structure (Hypothetical Model):

Rationale: This creates predictable Annual Recurring Revenue (ARR). It allows the customer to draw a direct ROI comparison: “This Role Agent costs $60k/year but replaces a headcount that costs $120k/year + benefits.” The Role Agent can work 24/7, if viable to the business use case. The arbitrage is clear.
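
The ROI comparison a buyer would run can be sketched as follows. The $60k agent price and $120k salary come from the example above; the 25% benefits loading is a hypothetical assumption.

```python
def agent_roi(
    agent_annual_cost: float, salary: float, benefits_rate: float
) -> tuple[float, float]:
    """Return (annual savings, savings as a multiple of the agent's cost)."""
    human_cost = salary * (1 + benefits_rate)
    savings = human_cost - agent_annual_cost
    return savings, savings / agent_annual_cost


# Benefits loading of 25% is an illustrative assumption
savings, roi_multiple = agent_roi(
    agent_annual_cost=60_000, salary=120_000, benefits_rate=0.25
)
```

Under these assumptions the buyer saves $90,000 per role per year, a return of 1.5 times the agent's price, before counting any benefit from round-the-clock availability.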

A strong example of this approach can be seen in the CX startup Crescendo.AI. The company charges a monthly fee per software agent, combined with a variable fee based on successfully resolved user interactions. In addition, Crescendo.AI offers an embedded skilled human-agent model at an incremental cost. Together, this represents a progressive, full-stack IT and BPO commercial model, pricing digital agents, human agents, and outcomes in a single integrated framework.

It will take time, but this is the inevitable direction of tomorrow’s business world: organizations will pay for humans and agents, across both hardware and software, based on the value they deliver.

4.3 The “Flex Credit” Model: Solving the Variability Issue

To handle the variable compute costs (tokens) while keeping the “Per Agent” price stable, the solution provider should consider adopting the “Flex Credit” model used by Salesforce and other large technology vendors.

- Mechanism: The subscription includes a “Base Allowance” of credits (e.g., 10,000 credits/month).

- Burn Rate: Different actions consume different amounts of credits. A simple query is 1 credit; a complex reasoning task is 50 credits.

- Overage: If the agents work overtime, the customer buys “Top-Up Packs” of credits.

- Benefit: This provides the predictability of a subscription with the revenue upside of usage-based pricing. It creates a “soft ceiling” rather than a hard limit.
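
The mechanism above can be sketched as a small billing function. The base allowance and the burn rates (1 and 50 credits) come from the example; the top-up pack size and price are hypothetical assumptions.

```python
import math

BASE_ALLOWANCE = 10_000  # credits per month, from the example above
CREDIT_COST = {"simple_query": 1, "complex_reasoning": 50}  # burn rates from the example
TOP_UP_PACK_SIZE = 5_000    # hypothetical credits per top-up pack
TOP_UP_PACK_PRICE = 500.0   # hypothetical price per pack


def monthly_credit_bill(usage: dict[str, int]) -> tuple[int, int, float]:
    """Return (credits used, top-up packs needed, overage charge)."""
    credits_used = sum(CREDIT_COST[action] * count for action, count in usage.items())
    overage = max(credits_used - BASE_ALLOWANCE, 0)
    # Packs are sold whole, so partial overage still requires a full pack
    packs = math.ceil(overage / TOP_UP_PACK_SIZE)
    return credits_used, packs, packs * TOP_UP_PACK_PRICE


quiet_month = monthly_credit_bill({"simple_query": 3_000, "complex_reasoning": 100})
busy_month = monthly_credit_bill({"simple_query": 4_000, "complex_reasoning": 200})
```

In the quiet month the customer stays inside the allowance and pays only the subscription. In the busy month usage reaches 14,000 credits, so one top-up pack is needed. Because packs are bought in discrete steps, overage is visible and budgetable rather than an open-ended meter.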

4.4 Outcome/Transaction-Based Pricing

For high-volume, transactional verticals, pricing per “Successful Outcome” is highly attractive but carries risk.

- Intercom Fin Model: Charges $0.99 per resolved conversation. If the AI fails and hands off to a human, the customer pays nothing.

- Applicability: Best for “Contact Center” or “Ticket Processing” agents where the definition of “success” is binary and indisputable (e.g., Ticket Closed).

- Risk: In complex B2B workflows (e.g., “Optimize Supply Chain”), “success” is harder to define. Did the agent optimize it, or did demand slow down? Outcome pricing here creates friction over attribution.
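
For the binary case, outcome billing is simple to express. The sketch below uses the $0.99 per-resolution price cited above; the conversation log is a hypothetical list of booleans where `True` means the AI resolved the conversation without a human handoff.

```python
PRICE_PER_RESOLUTION = 0.99  # per resolved conversation, as cited above


def outcome_bill(resolved_flags: list[bool]) -> float:
    """Charge only for conversations the AI resolved on its own."""
    resolved = sum(resolved_flags)  # True counts as 1, False as 0
    return round(resolved * PRICE_PER_RESOLUTION, 2)


# Hypothetical month: 1,000 conversations, 700 resolved by the AI
conversations = [True] * 700 + [False] * 300
bill = outcome_bill(conversations)
```

The 300 handed-off conversations cost the customer nothing, which is exactly why this model only works when "resolved" can be determined without dispute.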

5. Physical Autonomy: Pricing for Edge and Hardware

A common question is whether to charge according to the size of the hardware device running the software. This is a critical question for “Autonomous Organizations” that involve robotics, factories, or physical infrastructure. The pricing dynamics here differ from the Cloud because compute is constrained and the software is often “shipped” to the device.

5.1 Per-Device / Per-Node Licensing

For agents deployed on robots, drones, or IoT gateways, the standard model is a per-device license.

- The Metric: License fee per “Node” or “Endpoint.”

- Pricing Tiers based on Device Size/Power:

  - Micro-Edge (e.g., Cameras, Sensors): $25 – $100 / device / year. These devices run lightweight agents (quantized models) for detection or basic logic.

  - Standard Edge (e.g., AGVs, Kiosks): $500 – $1,500 / device / year. These devices run full local agents capable of navigation and task execution.

  - Heavy Edge (e.g., Factory Controllers, Autonomous Vehicles): $5,000 – $20,000 / device / year. These run the “Brain” of the autonomous operation, coordinating multiple sub-agents.
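
To see what these tiers imply for a mixed deployment, the sketch below computes the low and high annual license totals. The price ranges are the ones listed above; the device counts are hypothetical.

```python
# (low, high) annual price per device in USD, from the tiers above
DEVICE_TIERS = {
    "micro_edge": (25, 100),
    "standard_edge": (500, 1_500),
    "heavy_edge": (5_000, 20_000),
}


def fleet_annual_range(counts: dict[str, int]) -> tuple[int, int]:
    """Return the (low, high) annual license cost for a mix of devices."""
    low = sum(DEVICE_TIERS[tier][0] * n for tier, n in counts.items())
    high = sum(DEVICE_TIERS[tier][1] * n for tier, n in counts.items())
    return low, high


# Hypothetical site: 200 sensors, 50 AGVs, 2 factory controllers
site = {"micro_edge": 200, "standard_edge": 50, "heavy_edge": 2}
low_total, high_total = fleet_annual_range(site)
```

For this hypothetical site the annual total falls between $40,000 and $135,000. Note that the 50 standard-edge devices dominate the bill even though the sensors outnumber them four to one.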

5.2 Per-Socket / Per-Core Pricing (On-Premise Infrastructure)

If the software is running on the client’s on-premise servers (e.g., a private data center for a bank or defense contractor), the pricing must align with hardware procurement standards.

- The Nvidia/VMware Precedent: The industry standard is to price by CPU Socket or GPU Pair.

- Benchmark: Nvidia AI Enterprise charges approximately $4,500 per GPU socket per year.

- Strategy: The startup should mirror this. “One Agentic Core License” covers up to 2 GPUs or 32 CPU cores.

- Why: This simplifies procurement. The IT department knows how many servers they have; they can easily calculate the software cost. It also scales naturally: as they add more compute power to make the agents smarter, they pay for more licenses.
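
The license count an IT department would calculate can be sketched as below. The coverage limits (2 GPUs or 32 CPU cores per license) come from the strategy above. How the "or" applies to a mixed server is not specified, so this sketch assumes a server is licensed by its GPUs when it has any and by CPU cores otherwise.

```python
import math

GPUS_PER_LICENSE = 2        # from the strategy above
CPU_CORES_PER_LICENSE = 32  # from the strategy above


def licenses_required(gpus: int = 0, cpu_cores: int = 0) -> int:
    """Licenses for one server; assumes GPU count takes precedence when present."""
    if gpus > 0:
        return math.ceil(gpus / GPUS_PER_LICENSE)
    return math.ceil(cpu_cores / CPU_CORES_PER_LICENSE)


gpu_server = licenses_required(gpus=8)          # 8-GPU inference node
odd_gpu_server = licenses_required(gpus=3)      # partial pair still needs a license
cpu_server = licenses_required(cpu_cores=96)    # CPU-only node
```

Rounding up means a server with three GPUs needs two licenses, which is the usual convention in socket-based licensing and worth stating explicitly in the contract.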

5.3 Fleet Pricing vs. Device Pricing

For large deployments (e.g., a warehouse with 500 robots), “Per Device” pricing can become administratively burdensome.

- The Fleet License: Offer a “Site License” or “Fleet Tier.” Example: “Up to 500 Active Nodes” for a flat fee of $250,000/year.

- Meili FMS Model: Some fleet management software charges a “Code License” allowing full control and white-labeling, which effectively acts as a massive one-time transfer of IP rights for a high fee, suitable for very large enterprise partners.
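
A buyer choosing between the two structures will look for the break-even point. The sketch below uses the $250,000 flat fee and 500-node cap from the fleet license example; the per-device price passed in is a hypothetical value inside the Standard Edge range.

```python
FLEET_FEE = 250_000  # flat annual fee, from the example above
FLEET_CAP = 500      # maximum active nodes covered


def break_even_nodes(per_device_price: float) -> float:
    """Node count at which the fleet license costs the same as per-device."""
    return FLEET_FEE / per_device_price


def cheaper_option(nodes: int, per_device_price: float) -> tuple[str, float]:
    """Return the cheaper licensing option and its annual cost."""
    if nodes > FLEET_CAP:
        raise ValueError("node count exceeds the fleet license cap")
    per_device_total = nodes * per_device_price
    if FLEET_FEE < per_device_total:
        return "fleet", float(FLEET_FEE)
    return "per_device", float(per_device_total)


threshold = break_even_nodes(1_000)      # hypothetical $1,000 per device per year
small_site = cheaper_option(100, 1_000)
large_site = cheaper_option(400, 1_000)
```

At $1,000 per device the fleet license pays off above 250 nodes. At the full 500 nodes its effective price is $500 per node, the bottom of the Standard Edge range, so the fleet tier is in effect a volume discount in exchange for a larger commitment.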

6. Growth Strategy: The “Trojan Horse” and The Flywheel

How does one move from a high-touch, expensive service provider to a high-margin software platform?

6.1 The “Trojan Horse” Deployment Strategy

- Entry Point: Do not try to sell “The Platform” on Day 1. It is too big, too scary, and too expensive.

- The Wedge: Sell a specific “Solution” to a burning problem (e.g., “The Sales Account Research Report Agent”).

- The Trojan Horse: To build that specific agent, the FDEs must build a partial Ontology of the relevant business domain (in this example, the sales organization).

- The Expansion: Once that Ontology exists, building the next agent (e.g., “Sales Proposal Agent”) is 50% cheaper and faster because the data foundation is already laid.

- The Lock-in: As more agents are systematically added, and the Ontology grows richer, a full digital twin software platform of a sales organization can be created. The switching cost for the customer becomes insurmountable because no other vendor has that semantic map of their business.

6.2 Managing the Services-to-Software Ratio

- Year 1: Revenue is 70% Services (FDE), 30% Software. This funds the deployment and learning.

- Year 2: Revenue shifts to 50/50 as the “Platform Fee” kicks in and the initial agents generate recurring revenue.

- Year 3+: Revenue targets 20% Services, 80% Software. FDEs are either rotated to new clients or the client hires their own internal team to manage the platform (paying for training/certification from the startup).

Note that as fixed-price or gain-share projects are delivered, the split between services and software revenue becomes less clear-cut than in rate-card-based projects. For AI-focused startups, valuation multiples can be 10x or more higher for software revenue than for services revenue, so getting the software ratio as high as possible is imperative.

6.3 Pilot-to-Production Velocity

- The Agentic Pilots: Compress the sales cycle using “Agentic Pilots”: intense 1-5 day workshops where customers build live prototypes on their own data. Ditch the PowerPoint decks and build working solutions fast!

- Strategy: Offer “Agentic Pilots or Bootcamps” (free or low cost) to get the Ontology installed. The “Aha!” moment comes when the customer sees the agent perform a task autonomously on their data. This converts skepticism into budget.

7. Conclusion: The Blueprint for Building Successful AAO Software Projects

The “Agentic Autonomous Organization (AAO)” is not just a software product; it is an organizational transformation. Consequently, the business model must be transformative. Software solution providers cannot merely sell subscriptions or services; they must sell the capability of autonomy.

By leveraging the FDE model to conquer the complexity of enterprise data, monetizing the Ontology as a strategic asset, and pricing the software as Digital Labor (Per Agent) rather than abstract consumption (Tokens), a company can align its revenue growth with the massive value unlock of the AI era.

The recommended path is a Hybrid Model: Fixed-Price Services to land, Platform Fees to anchor, and Agentic Subscriptions to scale. This structure provides the cash flow to survive the early years if you are a startup, and the exponential upside to dominate the market in the long term.

For risk takers with strong balance sheet partners and consulting leadership: Gain-share Services can drive tremendous profit levels and valuations. While these commercial models have been few and very far between in the past, AI now provides the missing link technology to manage risk and ensure measured outcomes.

Table 2: Summary of Recommended Pricing Stack